Hello, I am Rabeya Akter. I hold a B.Sc. in Robotics and Mechatronics Engineering from the University of Dhaka, where I worked under Dr. Shafin Rahman and Dr. Sejuti Rahman on continuous Bangla sign language translation. More recently, my work has focused on time series forecasting, robotic manipulation, and visual scene understanding, including amodal counting and object reasoning in cluttered or occluded environments. Across these areas, I explore how multimodal representations can improve perception and decision-making in complex tasks.

Previously, I worked at Therap BD Ltd. as an Associate Software Engineer (QA), where I led quality assurance efforts for several machine learning systems. I also interned at Pathao Limited, where I worked on data science projects. Beyond research and engineering, I enjoy mentoring in olympiads, taking part in hackathons, and exploring cultural activities such as writing and performing.

I am actively looking for opportunities to pursue a PhD starting in Fall 2026.

Research Interests: Multimodal Learning, Visual Understanding, Language Grounding, Embodied AI

Connect with me!

News and Updates

September 22, 2025:

September 22, 2025:

February 14, 2025:

March 14, 2024:

January 24, 2024:

January 28, 2024:

September 25, 2021:

Research

I'm interested in natural language processing, computer vision, machine learning, multimodal learning, human-robot interaction, and robotics.

Completed

Counting Through Occlusion: Framework for Open World Amodal Counting

Safaeid Hossain Arib, Rabeya Akter, Abdul Monaf Chowdhury, MD Jubair Ahmed Sourov, MD Mehedi Hasan

Under Review at CVPR 2026 [arxiv] [code]

Abstract: Object counting has achieved remarkable success on visible instances, yet state-of-the-art (SOTA) methods fail under occlusion, a pervasive challenge in real-world deployment. This failure stems from a fundamental architectural limitation where backbone networks encode occluding surfaces rather than target objects, thereby corrupting the feature representations required for accurate enumeration. To address this, we present CountOCC, an amodal counting framework that explicitly reconstructs occluded object features through hierarchical multi-modal guidance. Rather than accepting degraded encodings, we synthesize complete representations by integrating spatial context from visible fragments with semantic priors from text-visual embeddings, generating class-discriminative features at occluded locations across multiple pyramid levels. A teacher-student supervision scheme anchors reconstructed features to their unoccluded counterparts, preserving properties essential for accurate counting. For rigorous benchmarking, we establish occlusion-augmented versions of FSC-147 and CARPK spanning both structured and unstructured scenes. Our framework, CountOCC, achieves SOTA performance on FSC-147 with a 32.2% MAE reduction over prior baselines under occlusion. CountOCC also demonstrates exceptional generalization by setting new SOTA results on CARPK with a 40.7% MAE reduction and on CAPTURE-Real with a 40.0% MAE reduction, validating robust amodal counting across diverse visual domains. Code will be released soon.

LaGEA: Language Guided Embodied Agents for Robotic Manipulation

Abdul Monaf Chowdhury, Akm Moshiur Rahman Mazumder, Rabeya Akter, Safaeid Hossain Arib

Under Review at ICLR 2026 [arxiv] [code]

Abstract: Robotic manipulation benefits from foundation models that describe goals, but today’s agents still lack a principled way to learn from their own mistakes. We ask whether natural language can serve as feedback, an error-reasoning signal that helps embodied agents diagnose what went wrong and correct course. We introduce LAGEA (Language Guided Embodied Agents), a framework that turns episodic, schema-constrained reflections from a vision language model (VLM) into temporally grounded guidance for reinforcement learning. LAGEA summarizes each attempt in concise language, localizes the decisive moments in the trajectory, aligns feedback with visual state in a shared representation, and converts goal progress and feedback agreement into bounded, step-wise shaping rewards whose influence is modulated by an adaptive, failure-aware coefficient. This design yields dense signals early when exploration needs direction and gracefully recedes as competence grows. On the Meta-World MT10 embodied manipulation benchmark, LAGEA improves average success over the state-of-the-art (SOTA) methods by 9.0% on random goals and 5.3% on fixed goals, while converging faster. These results support our hypothesis: language, when structured and grounded in time, is an effective mechanism for teaching robots to self-reflect on mistakes and make better choices. Code will be released soon.

T3Time: Tri-Modal Time Series Forecasting via Adaptive Multi-Head Alignment and Residual Fusion

Abdul Monaf Chowdhury, Rabeya Akter, Safaeid Hossain Arib

Accepted at AAAI 2026 Main Technical Track [arxiv] [code]

Abstract: Multivariate time series forecasting (MTSF) seeks to model temporal dynamics among variables to predict future trends. Transformer-based models and large language models (LLMs) have shown promise due to their ability to capture long-range dependencies and patterns. However, current methods often rely on rigid inductive biases, ignore intervariable interactions, or apply static fusion strategies that limit adaptability across forecast horizons. These limitations create bottlenecks in capturing nuanced, horizon-specific relationships in time-series data. To solve this problem, we propose T3Time, a novel trimodal framework consisting of time, spectral, and prompt branches, where the dedicated frequency encoding branch captures periodic structures and a gating mechanism learns to prioritize between temporal and spectral features based on the prediction horizon. We also propose a mechanism that adaptively aggregates multiple cross-modal alignment heads by dynamically weighting the importance of each head based on the features. Extensive experiments on benchmark datasets demonstrate that our model consistently outperforms state-of-the-art baselines, achieving an average reduction of 3.37% in MSE and 2.08% in MAE. Furthermore, it shows strong generalization in few-shot learning settings: with 5% training data, we see a reduction in MSE and MAE of 4.13% and 1.91%, respectively; and with 10% data, of 3.70% and 1.98% on average. Code is available at: https://github.com/monaf-chowdhury/T3Time

SignFormer-GCN: Continuous Sign Language Translation Using Spatio-Temporal Graph Convolutional Networks

Safaeid Hossain Arib, Rabeya Akter, Sejuti Rahman, Shafin Rahman

WiML Workshop, NeurIPS 2025 [Extended Abstract]; PLOS One [Full Paper] [paper] [code] [Extended Abstract]

Abstract: Sign language is a complex visual language system that uses hand gestures, facial expressions, and body movements to convey meaning. It is the primary means of communication for millions of deaf and hard-of-hearing individuals worldwide. Tracking physical actions, such as hand movements and arm orientation, alongside expressive actions, including facial expressions, mouth movements, eye movements, eyebrow gestures, head movements, and body postures, using only RGB features can be limiting due to discrepancies in backgrounds and signers across different datasets. Despite this limitation, most Sign Language Translation (SLT) research relies solely on RGB features. We used keypoint features together with RGB features to better capture the pose and configuration of the body parts involved in sign language actions and to complement the RGB features. Similarly, most SLT work has used transformers, which are good at capturing broader, high-level context and focusing on the most relevant video frames. Still, these models neglect the inherent graph structure associated with sign language and fail to capture low-level details. To solve this, we used a joint encoding technique combining a transformer and an STGCN architecture to capture the context of sign language expressions as well as the spatial and temporal dependencies on skeleton graphs. Our method, SignFormer-GCN, achieves competitive performance on the RWTH-PHOENIX-2014T, How2Sign, and BornilDB v1.0 datasets, showcasing its effectiveness in enhancing translation accuracy across different sign languages. The code is available at the following link: https://github.com/rabeya-akter/SignLanguageTranslation.

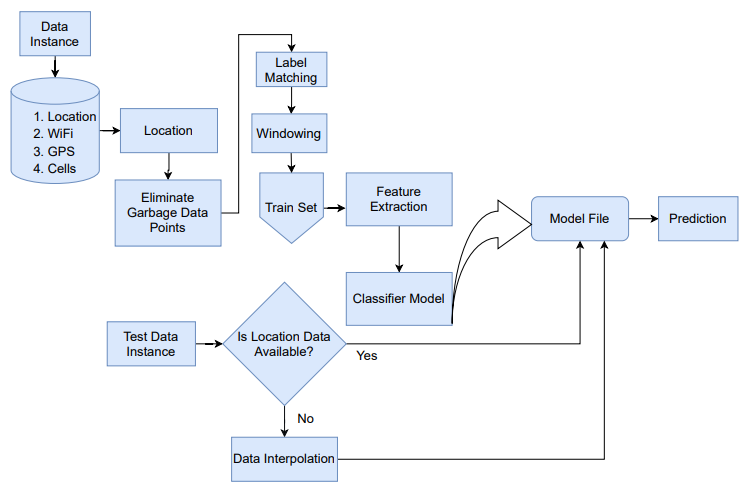

Classical machine learning approach for human activity recognition using location data

Safaeid Hossain Arib, Rabeya Akter, Sejuti Rahman, Shafin Rahman

UbiComp/ISWC '21 Adjunct: Adjunct Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2021 ACM International Symposium on Wearable Computers [paper] [code]

Abstract: The Sussex-Huawei Locomotion-Transportation (SHL) recognition Challenge 2021 was a competition to classify 8 different activities and modes of locomotion performed by three individual users. There were four different modalities of data (Location, GPS, WiFi, and Cells), recorded from the users' phones carried at the hip. The training set came from user-1, and the validation and test sets came from user-2 and user-3. Our team, ‘GPU Kaj Kore Na’, used only the location modality for our test-set predictions in this year’s competition, as location data gave more accurate predictions while the remaining modalities were too noisy and contributed little to accuracy. In our method, we used a statistical feature set for feature extraction and a Random Forest classifier for prediction. We achieved a validation accuracy of 78.138% and a weighted F1 score of 78.28% on the SHL Validation Set 2021.

Bornil: An open-source sign language data crowdsourcing platform for AI enabled dialect-agnostic communication

Shahriar Elahi Dhruvo, Mohammad Akhlaqur Rahman, Manash Kumar Mandal, Md Istiak Hossain Shihab, A. A. Ansary, Kaneez Fatema Shithi, Sanjida Khanom, Rabeya Akter, Safaeid Hossain Arib, M.N. Ansary, Sazia Mehnaz, Rezwana Sultana, Sejuti Rahman, Sayma Sultana Chowdhury, Sabbir Ahmed Chowdhury, Farig Sadeque, Asif Sushmit

[arxiv]

Abstract: The absence of annotated sign language datasets has hindered the development of sign language recognition and translation technologies. In this paper, we introduce Bornil, a crowdsource-friendly, multilingual sign language data collection, annotation, and validation platform. Bornil allows users to record sign language gestures and lets annotators perform sentence-level and gloss-level annotation. It also allows validators to check the quality of both the recorded videos and the annotations through manual validation, in order to develop high-quality datasets for deep learning based Automatic Sign Language Recognition. To demonstrate the system’s efficacy, we collected the largest sign language dataset for the Bangladeshi Sign Language dialect, performed deep learning based Sign Language Recognition modeling, and reported the benchmark performance. The Bornil platform, BornilDB v1.0 Dataset, and the codebases are available on https://bornil.bengali.ai.

Projects

Included here are projects completed as part of coursework, as well as independent personal endeavors.

Course Projects

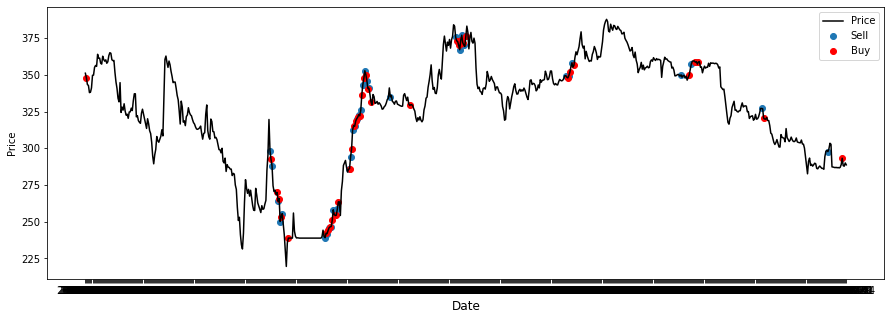

Automated Stock Trading Using Approximate Q Learning

RME 3211 (Intelligent Systems and Robotics Lab) [code]

Developed an automated stock trading system using approximate Q-learning to optimize buy, sell, and hold decisions for three Bangladeshi stocks, achieving an 11% return on investment and demonstrating the effectiveness of reinforcement learning in financial decision-making.

A Comprehensive Comparative Analysis of Emotional Support Delivery by NAO Robots and Humans Across Varied Emotional States

RME 4211 (Human Robot Interaction Lab) [code]

Conducted a comparative analysis of emotional support delivery by a NAO robot and humans across 3 emotional states. Developed a system and questionnaire to quantify emotional impact, comfort levels, communication clarity, and overall satisfaction, with the goal of assessing the efficacy of robotic versus human emotional support and identifying areas for improvement.

Personal Projects

Project HeatAlert: Predicting Heatwaves in Dhaka

This project aims to develop a machine learning-based system to forecast heatwave events in Dhaka city using historical weather data. By analyzing patterns in temperature, humidity, and other meteorological indicators, the model provides early warnings to help mitigate the impact of extreme heat on public health and urban infrastructure.

Education

Below is a concise summary of my academic background to date.

■ Bachelor of Science in Robotics & Mechatronics Engineering [2019–2024]

■ Higher Secondary School Certificate (HSC) [2016–2018]

■ Secondary School Certificate (SSC) [2006–2016]

Work Experience

I am committed to pursuing a research-driven career, whether in academia or industry. Below are some highlights of my professional experiences so far.

■ Associate Software Engineer, QA [Apr 2024 – May 2025]

■ AIM Intern [Jan 2024 – Mar 2024]

■ Undergraduate Research Assistant [Jan 2023 – Jan 2024]

Awards and Scholarships

Over the years, I’ve been honored with a few recognitions, made possible by the invaluable support of mentors and peers who have greatly influenced my growth.

■ Research Grant

■ Academic Scholarship

■ Hackathons

■ Others

Leadership

I dedicate part of my time to serving communities. Here are a few selected contributions.

■ Student Activity Secretary [Jan 2023 – Dec 2023]

■ Mentor [Jul 2019 – Dec 2020]

■ Mentor [Jun 2024 – Sep 2024]

■ Speaker: Dui Prishthar Bisheshoggo [Dec 2023]